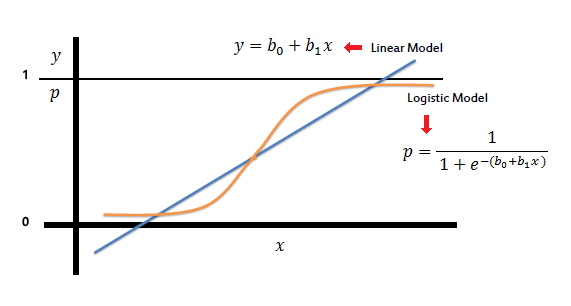

Logistic regression is very similar to linear regression: in both algorithms, a model is trained on data to learn a function that can then be used for further predictions. Like linear regression, it is a form of supervised learning. It tries to predict the responses for unlabeled, unseen data by first training on labeled data, that is, on a set of observations that consists of both independent (X) and dependent (Y) variables. But while <a href='https://guide.freecodecamp.org/machine-learning/linear-regression' target='_blank'>Linear Regression</a> assumes that the response variable (Y) is quantitative, or continuous, Logistic Regression is used specifically when the response variable is qualitative, or discrete.<br>

#### How it Works

Logistic regression models the probability that Y, the response variable, belongs to a certain category. In many cases the response variable is binary, so logistic regression models a function y = f(X) that outputs a value between 0 and 1 for all values of X, which can be read as the probability that Y belongs to one of its two categories. It does this by using the logistic function:

$$y = f(X) = \frac{e^{b_0 + b_1 X}}{1 + e^{b_0 + b_1 X}}$$
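As a rough illustration, the logistic function y = e^(b0 + b1*X) / (1 + e^(b0 + b1*X)) takes only a few lines of Python. This is a sketch: the function name `logistic_probability` and the coefficient values are hypothetical. For non-negative z, the code uses the equivalent form 1 / (1 + e^(-z)) so that `math.exp` cannot overflow.

```python
import math


def logistic_probability(x: float, b0: float, b1: float) -> float:
    """Return y = e^(b0 + b1*x) / (1 + e^(b0 + b1*x)).

    For z >= 0 the algebraically equivalent form 1 / (1 + e^-z) is used,
    and for z < 0 the original form e^z / (1 + e^z), so math.exp is only
    ever called with a non-positive argument and cannot overflow.
    """
    z = b0 + b1 * x
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


# Hypothetical coefficients, chosen only for illustration.
for x in (-10.0, 0.0, 10.0):
    print(x, round(logistic_probability(x, b0=0.0, b1=0.5), 4))
```

Whatever the input, the output stays between 0 and 1 and equals exactly 0.5 when b0 + b1*X = 0.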

Logistic regression is the appropriate regression analysis to conduct when the dependent variable is dichotomous (binary), but it also has other forms, such as multinomial (multi-valued) logistic regression, which is used to classify data into more than two classes. Like all regression analyses, logistic regression is a predictive analysis. It is used to describe data and to explain the relationship between one dependent binary variable and one or more nominal, ordinal, interval or ratio-level independent variables.

Logistic regression is used to solve classification problems, where the output is of the form $y \in \{0, 1\}$. Here, 0 is the negative class and 1 is the positive class. Let's say we have a hypothesis $h_\theta(x)$, where $x$ is our data set (a matrix) of length $m$ and $\theta$ is the parameter vector. We have $0 < h_\theta(x) < 1$.

In logistic regression, $h_\theta(x)$ is a sigmoid function: $h_\theta(x) = g(\theta^T x)$, where $g(z) = \frac{1}{1 + e^{-z}}$.
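Here is a minimal sketch of the hypothesis h_θ(x) = g(θᵀx) applied to every row of a small data set. It assumes that each row of `X` starts with a constant 1.0 so that `theta[0]` acts as the intercept. The names `sigmoid`, `hypothesis`, `theta` and `X`, and all of the numbers, are illustrative only.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    """g(z) = 1 / (1 + e^-z), computed so that math.exp never overflows."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def hypothesis(theta: List[float], X: List[List[float]]) -> List[float]:
    """Compute h_theta(x) = g(theta^T x) for each row x of X.

    Assumes every row already starts with a constant 1.0, so theta[0]
    plays the role of the intercept term.
    """
    return [sigmoid(sum(t * xi for t, xi in zip(theta, row))) for row in X]


# Hypothetical parameters and a 3-example data set (first column is the bias).
theta = [-1.0, 2.0]
X = [[1.0, 0.0], [1.0, 0.5], [1.0, 2.0]]
print(hypothesis(theta, X))
```

Each output lies between 0 and 1, so it can be read as the probability of the positive class for that row.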

Here, $h_\theta(x)$ is the predicted value, computed from the input attributes and the weights $\theta$. The weights are learned by an optimization algorithm such as gradient descent.

$y$ is the corresponding observed value from the data set.
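Below is a minimal sketch of batch gradient descent for the weights θ. It assumes the usual log-loss cost, whose gradient for the sigmoid hypothesis is the average of (h_θ(x) − y)·x over the training examples. The function name `gradient_descent`, the learning rate `alpha`, the iteration count and the toy data are all illustrative assumptions, not taken from this article.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    """Numerically stable logistic function g(z) = 1 / (1 + e^-z)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def gradient_descent(
    X: List[List[float]], y: List[int], alpha: float = 0.1, iterations: int = 5000
) -> List[float]:
    """Fit theta by batch gradient descent on the average log-loss cost.

    Each row of X is assumed to start with a constant 1.0 (bias term).
    Update rule: theta_j -= alpha * (1/m) * sum((h(x) - y) * x_j).
    """
    m, n = len(X), len(X[0])
    theta = [0.0] * n
    for _ in range(iterations):
        # Prediction error h_theta(x) - y for every training example.
        errors = [
            sigmoid(sum(t * xj for t, xj in zip(theta, row))) - yi
            for row, yi in zip(X, y)
        ]
        # Simultaneous update of every parameter theta_j.
        theta = [
            t - alpha * sum(e * row[j] for e, row in zip(errors, X)) / m
            for j, t in enumerate(theta)
        ]
    return theta


# Hypothetical 1-feature data set: small x -> class 0, large x -> class 1.
X = [[1.0, 0.5], [1.0, 1.0], [1.0, 1.5], [1.0, 3.0], [1.0, 3.5], [1.0, 4.0]]
y = [0, 0, 0, 1, 1, 1]
theta = gradient_descent(X, y)
print(theta)
```

A fixed iteration count keeps the sketch short. A real implementation would more likely stop once the cost stops decreasing.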

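The cost function here is not the squared error of the sigmoid output, as it would be in linear regression. Instead, it uses two log functions, one for each class:

$$\mathrm{Cost}(h_\theta(x), y) = \begin{cases} -\log\left(h_\theta(x)\right) & \text{if } y = 1 \\ -\log\left(1 - h_\theta(x)\right) & \text{if } y = 0 \end{cases}$$

When combined with the sigmoid, squared error gives a non-convex cost with many local minima. This log-based cost is convex, so gradient descent can reach the global minimum.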
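Averaged over m training examples, the two-case cost can be written as a single expression, J(θ) = -(1/m) Σ [y log h_θ(x) + (1 − y) log(1 − h_θ(x))], often called log loss or cross-entropy. The sketch below computes it in Python. The name `log_loss` and the clipping constant `eps`, which keeps `math.log` away from 0, are assumptions made for this example.

```python
import math
from typing import List


def log_loss(h: List[float], y: List[int], eps: float = 1e-15) -> float:
    """Average cost J = -(1/m) * sum(y*log(h) + (1 - y)*log(1 - h)).

    Predictions are clipped to [eps, 1 - eps] so log(0) is never evaluated.
    """
    m = len(y)
    total = 0.0
    for hi, yi in zip(h, y):
        hi = min(max(hi, eps), 1.0 - eps)
        # Only one of the two log terms is active for each label.
        total += -math.log(hi) if yi == 1 else -math.log(1.0 - hi)
    return total / m


print(log_loss([0.9, 0.2], [1, 0]))  # confident, correct predictions -> small cost
print(log_loss([0.1, 0.8], [1, 0]))  # confident, wrong predictions -> large cost
```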

Logistic regression models the probability of the default class (i.e. the first class).

You can classify the results given by

$$y = \frac{e^{b_0 + b_1 X}}{1 + e^{b_0 + b_1 X}}$$

into two classes. As is usual for the sigmoid function, 0.5 is set as the decision boundary. All x for which $y \ge 0.5$ are classified as class A, and all x for which $y < 0.5$ are classified as class B.

#### Multi-class logistic regression:

Although logistic regression is usually used for binary classification, you can also use it to classify data into multiple classes with one of the following methods:

##### one vs one method:

Here a binary classifier is created for every pair of classes, N(N-1)/2 classifiers in total for N classes. The output is obtained by comparing their results, typically by choosing the class that wins the most pairwise comparisons.

##### one vs all method:

Here a separate binary classifier is created for each class (that class versus all the others), and the class whose classifier gives the highest score is taken as the output.
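To make the two strategies concrete, here is a sketch of how already-trained binary classifiers could be combined at prediction time. Training is omitted. The three classes, the two input features and every parameter value are hypothetical and hand-picked. The helper names (`score`, `one_vs_all`, `one_vs_one`) are illustrative and do not come from any library's API.

```python
import math
from typing import Dict, List, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function g(z) = 1 / (1 + e^-z)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def score(theta: List[float], x: List[float]) -> float:
    """h_theta(x) = g(theta^T x), with theta[0] as the intercept."""
    return sigmoid(theta[0] + sum(t * xi for t, xi in zip(theta[1:], x)))


def one_vs_all(x: List[float], models: Dict[str, List[float]]) -> str:
    """One binary 'class vs. rest' model per class; the highest score wins."""
    return max(models, key=lambda c: score(models[c], x))


def one_vs_one(x: List[float], pair_models: Dict[Tuple[str, str], List[float]]) -> str:
    """One model per pair (a, b), where score >= 0.5 means 'a'.

    Each pairwise model casts one vote; the class with the most votes wins
    (ties go to the class that received its first vote earliest).
    """
    votes: Dict[str, int] = {}
    for (a, b), theta in pair_models.items():
        winner = a if score(theta, x) >= 0.5 else b
        votes[winner] = votes.get(winner, 0) + 1
    return max(votes, key=votes.get)


# Hypothetical, hand-picked parameters for N = 3 classes: one model per class.
ova_models = {
    "red": [-2.0, 2.0, -1.0],
    "green": [-2.0, -1.0, 2.0],
    "blue": [2.0, -1.0, -1.0],
}
# N(N-1)/2 = 3 pairwise models.
ovo_models = {
    ("red", "green"): [0.0, 1.0, -1.0],
    ("red", "blue"): [-1.5, 1.0, 0.0],
    ("green", "blue"): [-1.5, 0.0, 1.0],
}

for point in ([3.0, 0.0], [0.0, 3.0], [0.0, 0.0]):
    print(point, one_vs_all(point, ova_models), one_vs_one(point, ovo_models))
```

With N = 3 classes, both methods need 3 classifiers. The counts diverge as N grows: for N = 10, one vs all needs 10 and one vs one needs 45.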

#### Assumptions:

- Binary logistic regression requires the dependent variable to be binary.
- For a binary logistic regression, the factor level 1 of the dependent variable should represent the desired outcome.
- Only meaningful variables should be included.
- The independent variables should be independent of each other. That is, the model should have little or no multicollinearity.
- The independent variables should be linearly related to the log odds.
- Logistic regression requires quite large sample sizes.
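The log-odds assumption follows from the model itself. Solving y = e^(b0 + b1*X) / (1 + e^(b0 + b1*X)) for the exponent gives ln(y / (1 − y)) = b0 + b1*X. The sketch below checks this numerically using hypothetical coefficients. `logistic_probability` and `log_odds` are illustrative names.

```python
import math


def logistic_probability(x: float, b0: float, b1: float) -> float:
    """p(x) = e^(b0 + b1*x) / (1 + e^(b0 + b1*x)), computed without overflow."""
    z = b0 + b1 * x
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def log_odds(p: float) -> float:
    """Logit: ln(p / (1 - p)), the inverse of the logistic function."""
    return math.log(p / (1.0 - p))


b0, b1 = -1.0, 0.5  # hypothetical coefficients
for x in (0.0, 2.0, 4.0):
    p = logistic_probability(x, b0, b1)
    # p changes non-linearly with x, but its log odds equal b0 + b1*x.
    print(x, round(p, 4), round(log_odds(p), 6))
```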

#### More Information:


For further reading on building a logistic regression model step by step:

- Click <a href="https://medium.com/towards-data-science/building-a-logistic-regression-in-python-step-by-step-becd4d56c9c8" target='_blank' rel='nofollow'>here</a> for an article about building a Logistic Regression in Python.

- Click <a href="http://nbviewer.jupyter.org/gist/justmarkham/6d5c061ca5aee67c4316471f8c2ae976" target='_blank' rel='nofollow'>here</a> for another article on building a Logistic Regression and on the mathematics and intuition behind it.